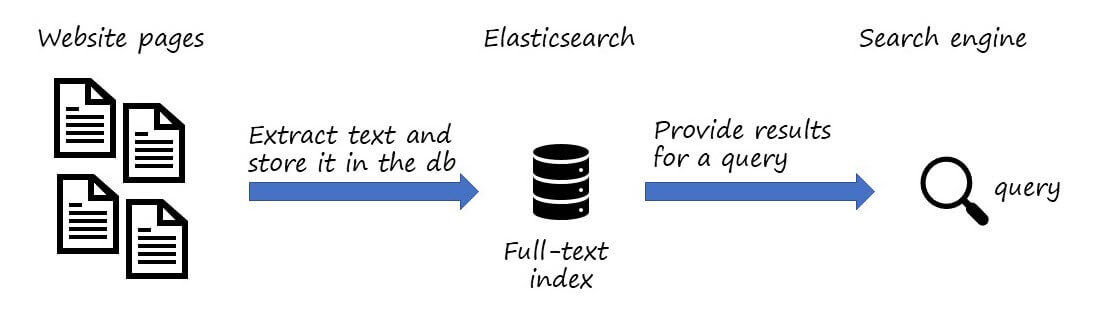

Searching for information on internal websites is often not an easy task. Public search engines cannot reach these pages, so you can't use your favorite one to find information, and you need to provide your own solution. Of course, you can't rebuild Google or Bing 😦 But you can still provide a search engine that is good enough. In this series, I'll show how to quickly create a search engine for your internal websites using Playwright and Elasticsearch.

A search engine is a service that allows users to search for content. The final goal is to show relevant results to the user. This means you need to find and index all pages. Finding pages is clearly the easiest part. Indexing the content is much harder. But you don't need to be as smart as the main search engines. A simple version is to use Elasticsearch to index the text content of pages. That way, you can search for words in the content of a page and Elasticsearch will find all pages that contain these words. Even better, it will sort the results by relevance.
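To give an intuition of what "indexing the text content" means, here is a minimal sketch of an inverted index with a naive relevance score. This is a toy illustration, not how Elasticsearch is implemented: Elasticsearch analyzes text and scores documents with BM25 by default. The page URLs, the texts, and the `Search` helper are made up for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical pages (url -> text content); a real index would be filled by the crawler
var pages = new Dictionary<string, string>
{
    ["https://example.com/install"] = "Install the tool and run the tool",
    ["https://example.com/faq"] = "Questions about the tool",
    ["https://example.com/about"] = "About the team",
};

// Inverted index: word -> (url -> number of occurrences of the word in that page)
var index = new Dictionary<string, Dictionary<string, int>>(StringComparer.OrdinalIgnoreCase);
foreach (var (url, text) in pages)
{
    foreach (var word in text.Split(' ', StringSplitOptions.RemoveEmptyEntries))
    {
        if (!index.TryGetValue(word, out var postings))
            index[word] = postings = new Dictionary<string, int>();

        postings[url] = postings.GetValueOrDefault(url) + 1;
    }
}

// Score = total number of occurrences of the query words (a toy measure, not BM25)
List<(string Url, int Score)> Search(string query)
{
    var scores = new Dictionary<string, int>();
    foreach (var word in query.Split(' ', StringSplitOptions.RemoveEmptyEntries))
    {
        if (!index.TryGetValue(word, out var postings))
            continue;

        foreach (var (url, count) in postings)
            scores[url] = scores.GetValueOrDefault(url) + count;
    }

    return scores
        .OrderByDescending(s => s.Value)
        .Select(s => (Url: s.Key, Score: s.Value))
        .ToList();
}

foreach (var (url, score) in Search("tool"))
    Console.WriteLine($"{score} {url}");
```

Pages that contain the query word more often come first, and pages without the word are not returned at all. A real engine also handles punctuation, stemming, and rare versus common words, which is why delegating this part to Elasticsearch is a good trade-off.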

Now that we know how to index the content of a page, we need to extract the content of pages and explore websites to discover all pages. Some pages use JavaScript to load dynamic content, so you need a browser to correctly load pages before indexing them. This is the purpose of Playwright. Playwright provides an API to automate Chromium, Firefox and WebKit. So, you can open a page and extract its text and its links to other pages. Then, you can open the other pages and extract their text and links, and so on until you have explored all the pages.
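The exploration described above is a breadth-first traversal: a queue of pages to process and a set of pages already visited. Here is a minimal sketch of that loop, assuming a hypothetical in-memory site (the `site` dictionary) instead of a real browser, so it can run without Playwright.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory "website": each page maps to the links it contains.
// A real crawler would load the page in a browser instead of reading this dictionary.
var site = new Dictionary<string, string[]>
{
    ["/"] = new[] { "/docs", "/blog" },
    ["/docs"] = new[] { "/", "/docs/setup" },
    ["/blog"] = new[] { "/docs", "/blog/post-1" },
    ["/docs/setup"] = Array.Empty<string>(),
    ["/blog/post-1"] = new[] { "/" },
};

var urlsToProcess = new Queue<string>();
var visitedUrls = new HashSet<string>();
var crawlOrder = new List<string>();

urlsToProcess.Enqueue("/");
while (urlsToProcess.TryDequeue(out var url))
{
    // HashSet.Add returns false when the url was already visited
    if (!visitedUrls.Add(url))
        continue;

    crawlOrder.Add(url); // placeholder for "extract the text and index it"

    // Only queue the links that were not processed yet
    foreach (var link in site[url])
    {
        if (!visitedUrls.Contains(link))
            urlsToProcess.Enqueue(link);
    }
}

Console.WriteLine(string.Join(", ", crawlOrder));
```

The visited set is what makes the loop terminate even though the pages link back to each other.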

# Indexing pages

This project crawls a website and stores its content in Elasticsearch. So, the first step is to have an Elasticsearch instance running. If you don't have one, you can use Docker to quickly start a new single-node instance:

PowerShell

docker run -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" docker.elastic.co/elasticsearch/elasticsearch:7.15.0

Then, you can create a new console project and add a reference to Playwright and NEST (the Elasticsearch client). For Playwright, you also need to use its CLI to install the browsers.

Shell

dotnet new console

dotnet add package Microsoft.Playwright

dotnet add package NEST

dotnet tool update --global Microsoft.Playwright.CLI

dotnet build

playwright install

Then, you can use the following code:

C#

using Microsoft.Playwright;

var rootUrl = new Uri("https://example.com");

var urlsToProcess = new Queue<Uri>();

urlsToProcess.Enqueue(rootUrl);

// Keep a list of all visited urls,

// so we don't process the same url twice

var visitedUrls = new HashSet<Uri>();

// Initialize Elasticsearch

var elasticClient = new Nest.ElasticClient(new Uri("http://localhost:9200"));

var indexName = "example-pages";

await elasticClient.Indices.CreateAsync(indexName);

// Initialize Playwright

using var playwright = await Playwright.CreateAsync();

await using var browser = await playwright.Chromium.LaunchAsync();

await using var browserContext = await browser.NewContextAsync();

var page = await browserContext.NewPageAsync();

// Process urls

while (urlsToProcess.TryDequeue(out var uri))

{

if (!visitedUrls.Add(uri))

continue;

Console.WriteLine($"Indexing {uri}");

// Open the page

var pageGotoOptions = new PageGotoOptions { WaitUntil = WaitUntilState.NetworkIdle };

var response = await page.GotoAsync(uri.AbsoluteUri, pageGotoOptions);

if (response == null || !response.Ok)

{

Console.WriteLine($"Cannot open the page {uri}");

continue;

}

// Extract data from the page

var elasticPage = new ElasticWebPage

{

Url = (await GetCanonicalUrl(page)).AbsoluteUri,

Contents = await GetMainContents(page),

Title = await page.TitleAsync(),

};

// Store the data in Elasticsearch

await elasticClient.IndexAsync(elasticPage, i => i.Index(indexName));

// Find all links and add them to the queue

// note: we do not want to explore the whole web,

// so we filter out urls that are not from the rootUrl's domain

var links = await GetLinks(page);

foreach (var link in links)

{

if (!visitedUrls.Contains(link) && link.Host == rootUrl.Host)

urlsToProcess.Enqueue(link);

}

}

// Search for <link rel=canonical href="value"> to only index similar pages once

async Task<Uri> GetCanonicalUrl(IPage page)

{

var pageUrl = new Uri(page.Url, UriKind.Absolute);

var link = await page.QuerySelectorAsync("link[rel=canonical]");

if (link != null)

{

var href = await link.GetAttributeAsync("href");

if (Uri.TryCreate(pageUrl, href, out var result))

return result;

}

return pageUrl;

}

// Extract all <a href="value"> from the page

async Task<IReadOnlyCollection<Uri>> GetLinks(IPage page)

{

var result = new List<Uri>();

var anchors = await page.QuerySelectorAllAsync("a[href]");

foreach (var anchor in anchors)

{

var href = await anchor.EvaluateAsync<string>("node => node.href");

if (!Uri.TryCreate(href, UriKind.Absolute, out var url))

continue;

result.Add(url);

}

return result;

}

// The logic is to search for the <main> elements or any element

// with the attribute role="main"

// Also, we use the "innerText" property as it considers

// the style to remove invisible text.

async Task<IReadOnlyCollection<string>> GetMainContents(IPage page)

{

var result = new List<string>();

var elements = await page.QuerySelectorAllAsync("main, *[role=main]");

if (elements.Any())

{

foreach (var element in elements)

{

var innerText = await element.EvaluateAsync<string>("node => node.innerText");

result.Add(innerText);

}

}

else

{

var innerText = await page.InnerTextAsync("body");

result.Add(innerText);

}

return result;

}

[Nest.ElasticsearchType(IdProperty = nameof(Url))]

public sealed class ElasticWebPage

{

[Nest.Keyword]

public string? Url { get; set; }

[Nest.Text]

public string? Title { get; set; }

[Nest.Text]

public IEnumerable<string>? Contents { get; set; }

}
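The canonical URL is used as the document id, so two URLs that declare the same canonical page end up as a single document in the index. Here is a small sketch of how the `href` gets resolved against the page URL. The `ResolveCanonical` helper and the sample URLs are hypothetical; the logic mirrors the `Uri.TryCreate` call in `GetCanonicalUrl`.

```csharp
using System;

// Resolve the href of <link rel="canonical"> against the url of the current page.
// Falls back to the page url when the href is missing or invalid.
Uri ResolveCanonical(Uri pageUrl, string? href)
{
    if (href != null && Uri.TryCreate(pageUrl, href, out var result))
        return result;

    return pageUrl;
}

// Hypothetical page opened by the crawler, with a tracking parameter
var currentPage = new Uri("https://example.com/docs/page?utm_source=newsletter");

// A relative href is resolved against the current page
Console.WriteLine(ResolveCanonical(currentPage, "/docs/page"));

// An absolute href is used as-is
Console.WriteLine(ResolveCanonical(currentPage, "https://example.com/docs/page"));

// No canonical link: keep the url of the page
Console.WriteLine(ResolveCanonical(currentPage, null));
```

The first two calls resolve to the same URL, so the page with the tracking parameter and the clean page would share one document id.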

You can now run the code by using dotnet run. The code will crawl the website and index all the pages.

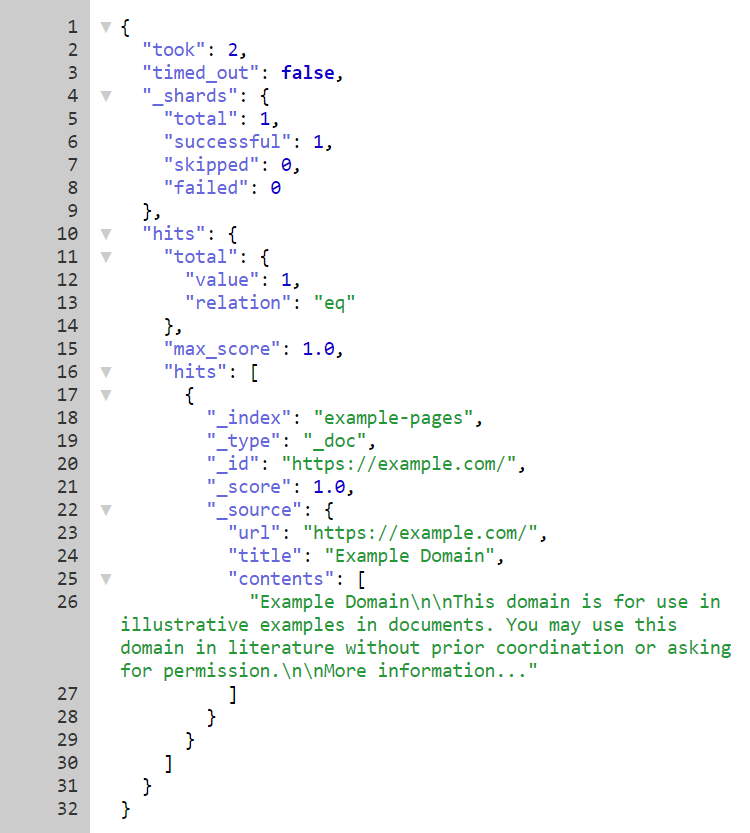

You can validate the content of the index by using Kibana or by navigating to http://localhost:9200/example-pages/_search. The latter returns the first documents of the index (10 by default).

# Searching data in Elasticsearch

This project is a web application that uses the Elasticsearch index to show results.

The first step is to create a new web application and add a reference to NEST (Elasticsearch client):

PowerShell

dotnet new razor

dotnet add package NEST

Then, you can replace the content of Pages/Index.cshtml with the following content:

Razor

@page

@using Microsoft.AspNetCore.Html

@using Nest

<h1>My Search Engine</h1>

<form method="get">

<div class="searchbox">

<input type="search" name="search" placeholder="query..." value="@Search" />

<button type="submit">🔍</button>

</div>

</form>

<ol class="results">

@foreach (var match in matches)

{

<li class="result">

<h2 class="result_title"><a href="@match.Url" target="_blank">@match.Title</a></h2>

<div class="result_attribution">

<cite>@match.Url</cite>

</div>

<p>@match.Highlight</p>

</li>

}

</ol>

@functions {

private List<MatchResult> matches = new();

[FromQuery(Name = "search")]

public string? Search { get; set; }

public async Task OnGet()

{

if (Search == null)

return;

var settings = new ConnectionSettings(new Uri("http://localhost:9200"))

.DefaultIndex("example-pages");

var client = new ElasticClient(settings);

var result = await client.SearchAsync<ElasticWebPage>(s => s

.Query(q => q

.Bool(b =>

b.Should(

bs => bs.Match(m => m.Field(f => f.Contents).Query(Search)),

bs => bs.Match(m => m.Field(f => f.Title).Query(Search))

)

)

)

.Highlight(h => h

.Fields(f => f

.Field(f => f.Contents)

.PreTags("<strong>")

.PostTags("</strong>")))

);

var hits = result.Hits

.OrderByDescending(hit => hit.Score)

.DistinctBy(hit => hit.Source.Url);

foreach (var hit in hits)

{

// Merge highlights and add the match to the result list

var highlight = string.Join("...", hit.Highlight.SelectMany(h => h.Value));

matches.Add(new(hit.Source.Title!, hit.Source.Url!, new HtmlString(highlight)));

}

}

record MatchResult(string Title, string Url, HtmlString Highlight);

[Nest.ElasticsearchType(IdProperty = nameof(Url))]

public sealed class ElasticWebPage

{

public string? Url { get; set; }

public string? Title { get; set; }

public IEnumerable<string>? Contents { get; set; }

}

}

You can improve the UI by adding a few CSS rules:

CSS

h1, h2, h3, h4, h5, h6, p, ol, ul, li {

border: 0;

border-collapse: collapse;

border-spacing: 0;

list-style: none;

margin: 0;

padding: 0;

}

.searchbox {

width: 545px;

border: gray 1px solid;

border-radius: 24px;

}

.searchbox button {

margin: 0;

transform: none;

background-image: none;

background-color: transparent;

width: 40px;

height: 40px;

border-radius: 50%;

border: none;

cursor: pointer;

}

input {

width: 499px;

font-size: 16px;

margin: 1px 0 1px 1px;

padding: 0 10px 0 19px;

border: 0;

max-height: none;

outline: none;

box-sizing: border-box;

height: 44px;

vertical-align: top;

border-radius: 6px;

background-color: transparent;

}

.results {

color: #666;

}

.result {

padding: 12px 20px 0;

}

.result_title > a {

font-size: 20px;

line-height: 24px;

font-family: 'Roboto', sans-serif;

}

.result_attribution {

padding: 1px 0 0 0;

}

.result_attribution > cite {

color: #006621;

}

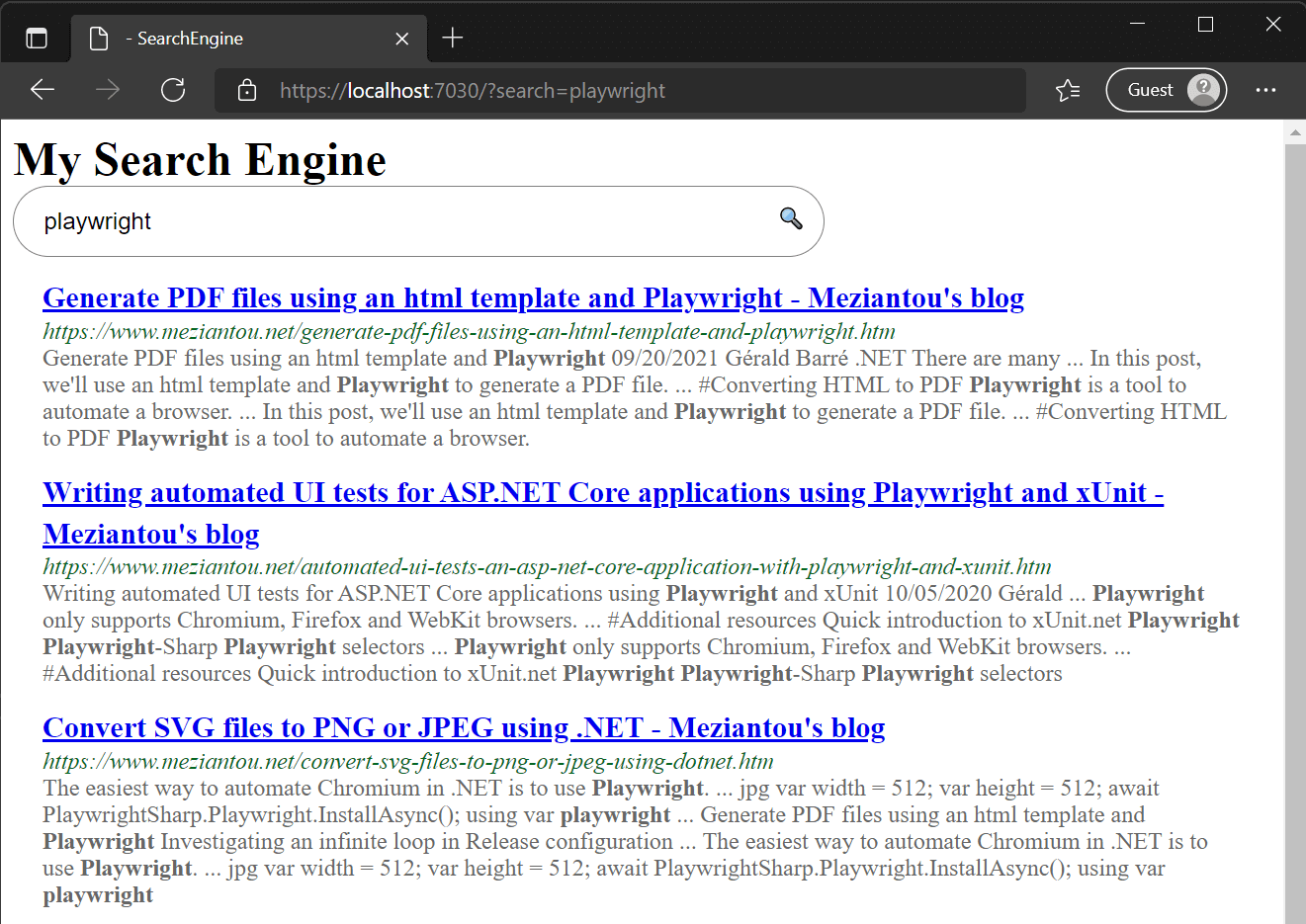

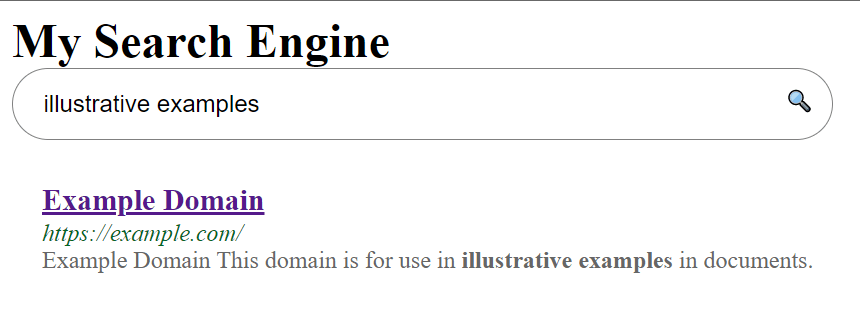

You can run the web application by using dotnet run, open it in your browser, and write your first query.

# Conclusion

In a few dozen lines of code, you can create a search engine for your websites thanks to Playwright and Elasticsearch. This is not a Google replacement, but the results may be good enough for your needs. Note that you can extract more data such as the page description, links, or headers. Then, you can weight each field to get better results. Another improvement would be to use sitemaps and feeds to discover pages, so you don't miss an important page that nothing links to.
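As an illustration of the sitemap idea, here is a minimal sketch that reads the `<loc>` entries of a sitemap and queues them, assuming the standard sitemap XML namespace. The XML content is a made-up sample embedded in the code; a real crawler would download it first, for instance from a `/sitemap.xml` URL if the site exposes one.

```csharp
using System;
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical sitemap content, embedded here so the sample runs offline
var sitemapXml = @"<urlset xmlns=""http://www.sitemaps.org/schemas/sitemap/0.9"">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/docs</loc></url>
  <url><loc>not a valid url</loc></url>
</urlset>";

// Elements of a sitemap live in this XML namespace
XNamespace ns = "http://www.sitemaps.org/schemas/sitemap/0.9";

var urlsToProcess = new Queue<Uri>();
foreach (var loc in XDocument.Parse(sitemapXml).Descendants(ns + "loc"))
{
    // Ignore entries that are not valid absolute urls
    if (Uri.TryCreate(loc.Value.Trim(), UriKind.Absolute, out var uri))
        urlsToProcess.Enqueue(uri);
}

foreach (var uri in urlsToProcess)
    Console.WriteLine(uri);
```

The resulting queue can seed the crawl loop in addition to the root URL, so pages that are listed in the sitemap but not linked from anywhere still get indexed.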

Notes:

- If the websites you want to index don't rely on JavaScript or any advanced browser feature, you can replace Playwright with AngleSharp, which should be faster

- The sample on GitHub is faster as it indexes pages in parallel, and it extracts more data
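To illustrate field weighting, here is a sketch of a raw Elasticsearch request body that uses a `multi_match` query with a boost on the title. It assumes the fields are named `title` and `contents` (NEST camel-cases property names by default), and the weight of 3 is an arbitrary value to tune on your own data. The body is built with System.Text.Json so the sample runs without an Elasticsearch instance.

```csharp
using System;
using System.Text.Json;

var searchText = "playwright"; // hypothetical user query

// "title^3" means a match in the title counts 3 times more than a match in the contents
var request = new
{
    query = new
    {
        multi_match = new
        {
            query = searchText,
            fields = new[] { "title^3", "contents" },
        },
    },
};

var json = JsonSerializer.Serialize(request, new JsonSerializerOptions { WriteIndented = true });
Console.WriteLine(json);
```

You can send this body to the `_search` endpoint of the index to compare the ranking with the unweighted query used in the page above.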
